“Life mostly happens to sustain life, so living things care about what happens. The computer, not alive and not designed by evolution, doesn’t care about survival or reproduction — in fact, it doesn’t care about anything. Computers aren’t dangerous in the way snakes or hired killers are dangerous. Although many movies explore horror fantasies of computers turning malicious, real computers lack the capacity for malice.”

~ Roy Baumeister from “What to Think About Machines that Think” (2015)

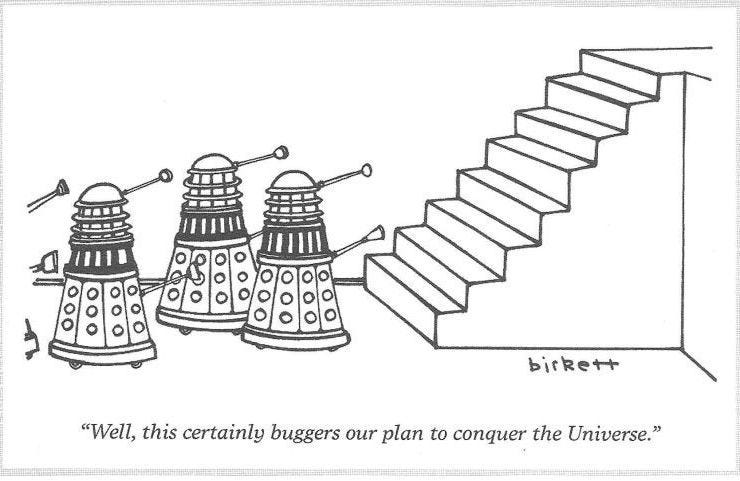

from Punch Magazine sometime in the early 1980s

Before I disappear down my AI rabbit hole, I would like to thank the many subscribers who signed up for a paid subscription over the past week, as we made good on our promise to erect a paywall on Pitchfork Papers and to launch Pitchfork Press on our third anniversary. I am truly grateful for the encouragement and support those subscriptions represent, and I am doubly enthusiastic about the writing and thinking journey ahead.

Thank you.

Steven

If I needed a single meme to describe my perspective on the fear of technology, it would be Birkett’s “Dalek” cartoon - one of my favourites - published in Punch magazine in its glorious heyday, when Alan Coren was Editor in the 1980s. I love it for its irreverence and for its perception. (And while we are on the subject of favourite Punch cartoons that have stayed with me and still make me smile 40 years on, here is my second favourite.)

from Punch Magazine sometime around Dec 1980

There appears to be a feeding frenzy of hand-wringing and apocalyptic self-flagellation about AI and the threat it poses to humanity’s very existence, now that GPT-4, ChatGPT-4 (or is it 5?), Bing AI and a host of other powerful data-synthesizing technologies based on natural language processing algorithms are available for broad consumption and characteristic misappropriation by the great unwashed - including me. Pretty much every Substack author worth his or her salt has weighed in on the topic this week, giving me an excuse to sort out my own views on the subject coram publico. And I will be the first to admit that there have been some outstanding contributions on the threats, perceived and real, posed by the widespread availability of AI-based engines and by the uses to which they are put.

Not that any of this is new: as a species, we have been grappling with the implications of technology for the human condition since around 1802, when a German economics professor at Göttingen University, Johann Beckmann, resurrected the term “technology” (Technologie, to use his own word) for the modern age. The term “techne logos”, originally minted by Aristotle in his treatise on Rhetoric some 2,400 years ago, had never been taken up as a concept or “thing” until Beckmann gave it a name and attempted to codify it.

The two primary questions that appear to be concentrating minds both great and small are, on the one hand, the existential and philosophical question of whether AI “machines” are going to render us obsolete as a species (because they are faster, “know” more than any one of us can individually, and are developing human-like abilities to articulate and synthesize) and, on the other, the question of whether, simultaneously but not necessarily in connection with the first, they are going to put us all out of work, rendering us economically surplus to requirements.

Good questions. Or at least good balls to hook for six out of the park, if we have a modicum of self-confidence in who we are as a species and in what purpose an economy serves in our social organization.
